
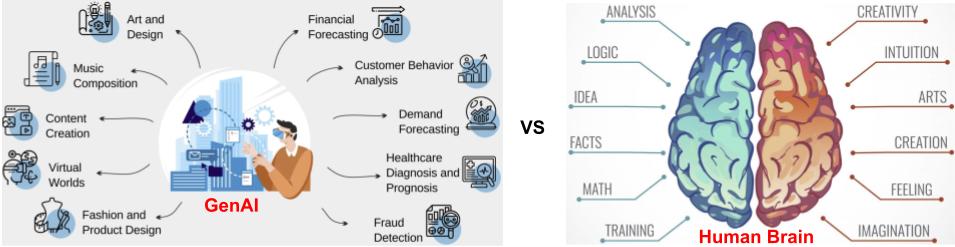

As we know, the human brain also generates new and meaningful data! It does so via random or directed thoughts, imagination, intuition, dreams, and so on.

Two big and interesting questions follow: How do the two compare with each other (which one is better?!), and what can these two systems of data generation learn from each other?

We try to answer these KEY QUESTIONS in this article.

Comparing Generative AI and Human Brain

State-of-the-art GenAI systems are based on artificial neural networks (ANNs). ANNs are inspired by the human brain (i.e., its mesh of neurons), which makes the key questions addressed in this article a LOT MORE interesting! These ANN-based GenAI tools learn from a vast amount of data (at times, something like the whole of the internet), typically in a training step. ANNs can typically be viewed as large graph networks storing a condensed and processed form of the data they have been exposed to. During data generation (aka inference), "input prompts" are used as a guide to pull new data out of this "stored" form; the ANNs learn the precise "math" to do so in the training step. Note that the kind and nature of the dataset used for training the ANNs is crucial, as ANNs are NOT a magic box or some sort of Aladdin's Chirag (magic lamp). They can only generate something new "based" on what already existed in the dataset used for training. For example, for DALL-E (a popular GenAI image generator) to be able to generate a new dog image, one had to ensure that the training dataset used to train DALL-E had ample images / examples of dogs in various forms (e.g., poses, breeds, sizes).
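To make the "can only generate based on what was in the training data" point concrete, here is a deliberately tiny sketch. It is not how DALL-E or ChatGPT work internally (those are large neural networks). It is a hypothetical word-level bigram model, with a made-up corpus and illustrative function names, that "stores" which word followed which during training and then uses a prompt to pull out word sequences at inference time.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Training step: count which word follows which (a condensed form of the data)."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model

def generate(model: dict[str, Counter], prompt: str, max_words: int = 5) -> list[str]:
    """Inference step: extend the prompt greedily with the most frequent follower."""
    words = prompt.lower().split()
    for _ in range(max_words):
        followers = model.get(words[-1])
        if not followers:
            break  # nothing was learned about this word, so nothing can be generated
        words.append(followers.most_common(1)[0][0])
    return words

# Hypothetical toy training data.
corpus = ["the dog runs fast", "the dog barks loudly", "a cat sleeps"]
model = train_bigram(corpus)
print(generate(model, "the"))    # ['the', 'dog', 'runs', 'fast']
print(generate(model, "zebra"))  # ['zebra'] ("zebra" never appeared in training)
```

The prompt "zebra" produces nothing new because the model never saw that word during training. In the same way, a real GenAI system has no basis for generating things its training data did not cover.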

Like the artificial neural network at the core of a modern-day GenAI system, the human brain also uses a network of "neurons" (a biological neural network, or BNN) to learn and to engage in creativity / cognition using learned information. Specifically, learned "stuff" is stored in the "connections" (aka synapses) between neurons. The brain has not one but multiple specialized neural networks, each linked to one or more tasks (e.g., facial recognition, thought generation). For instance, the Default Mode Network is linked to spontaneous, internally directed thoughts, such as daydreaming or mind-wandering. Cognition (the mental action or process of acquiring knowledge and understanding through thought, experience, and the senses) happens through the coordinated activity of one or more specialized neural networks.

A BNN can be triggered to perform its intended task by some sensory input (e.g., a visual image or a physical touch) or by some internal process (such as a thought). This is analogous to the inference phase of ANNs. Much like in an ANN, inputs to a BNN are received by a set of neurons (sensory neurons) and relayed onward by interneurons. The input signal from a neuron is transformed (as dictated by the "learned" information stored at the synapse) and transmitted to a neighboring neuron to which it is connected via that synapse. This transmission continues from one receiving neuron to the next neuron in its neighborhood, via the synaptic connections between them. The overall process can somewhat be likened to a wave propagating across the BNN.
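On the ANN side, the same "transform at each connection, pass it on" idea is a forward pass. The sketch below uses made-up weights and a hypothetical `propagate` helper. Each receiving neuron sums its weighted inputs, where the weights play the role of the learned synaptic strengths, and then applies a nonlinearity (`tanh` here, chosen only for illustration) before passing the result to the next layer.

```python
import math

def propagate(signal: list[float], layers: list[list[list[float]]]) -> list[float]:
    """Pass a signal through successive layers of weighted connections."""
    for weights in layers:
        # Each receiving neuron sums its weighted inputs, then applies a nonlinearity.
        signal = [math.tanh(sum(w * s for w, s in zip(row, signal))) for row in weights]
    return signal

# Hypothetical weights: 2 input neurons -> 2 hidden neurons -> 1 output neuron.
layers = [
    [[1.0, 1.0], [1.0, -1.0]],  # layer 1: one row of connection weights per receiving neuron
    [[0.5, 0.5]],               # layer 2
]
print(propagate([1.0, -1.0], layers))
```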

As signals flow through a brain neural network, the resulting flow pattern can potentially represent an outcome (e.g., a perception, a memory, or a thought). Usually, multiple brain neural networks are involved in a task such as face recognition. As the task is being performed, signals propagate from one neural network to another along a set pathway. For instance, in visual tasks, visual sensory inputs are first sent to the primary visual cortex, where basic visual features such as orientation, edges, and motion are detected. The resulting wave of signals is then received by multiple adjacent neural networks, which are responsible for a variety of sub-tasks ranging from depth processing to motion perception. Eventually, the signals arrive at neural networks in the temporal lobe, which perform face recognition.

Thought generation (talking of CONTENT CREATION!) works in a similar way. As mentioned, for a task (such as perception, emotion, memory, or decision-making), there is a group of neural networks arranged in a neural pathway. These groups of neural networks work in an integrated way to produce thoughts. Dreams and thoughts share some underlying neural mechanisms. According to one theory, dreams originate from random neural activity that occurs during REM sleep. In the process, the neural networks involved also incorporate information from reality, waking experiences, and imagination to eventually create a coherent narrative (aka a dream).

So, given that both GenAI and the human brain can do content creation, how do they compare with each other? Which one is better? It depends on the task! Compared to a human brain, GenAI systems can produce a vast amount of data / output much faster and much more consistently for many tasks, for example, drawing a million different pictures of people using DALL-E. But in many other tasks, GenAI cannot perform as well as a human brain. See this article for details on which kinds of tasks. In short, for anything that leans towards "GENERAL intelligence", GenAI has a hard time matching a human brain.

What Can Human Brain Learn from GenAI?

Given the key similarities between ANNs and the human brain (at the foundational level) and the enormous success of GenAI in recent times, can the human brain learn anything from GenAI? I believe so, in fact a lot, such as:

- Exposure - A modern-day GenAI system, based on ANNs, is only as good as the data it has seen. A powerful modern-day GenAI system such as ChatGPT is trained on virtually the whole of the Internet! Studies (1, 2, 3, 4) also suggest that, like ANNs, we should be hungry for data / information / knowledge! The more we expose ourselves to knowledge, the better we can get at creativity. So, your mama was right - learn, learn, learn ... study, study, study!

- Recursive Prompt Engineering (RPE) - Prompt Engineering (PE) is now one of the hottest topics in the AI world! It turns out that the quality of data created by GenAI depends A LOT on the input prompts.

Badly or unintelligently crafted inputs can lead to ambiguous, overly broad, or non-concise responses. Even a good input can often be improved, leading to a much higher quality of output. For instance, consider "Summarize the article about climate change" vs. "Please provide a 3-4 sentence summary focusing on the main causes and potential impacts of climate change discussed in the article."

In general, providing more context and specificity in the input can result in a much more desirable output.
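As a small illustration of "add context and specificity", here is a sketch of a hypothetical `build_prompt` helper. It turns the vague climate-change prompt into a more specific one by appending explicit constraints for length and focus. The helper and its parameter names are made up for this example and do not belong to any GenAI API.

```python
def build_prompt(task: str, *, length: str = "", focus: tuple[str, ...] = ()) -> str:
    """Turn a bare task into a more specific prompt by appending explicit constraints."""
    parts = [task.rstrip(".") + "."]
    if length:
        parts.append(f"Keep it to {length}.")
    if focus:
        parts.append("Focus on " + " and ".join(focus) + ".")
    return " ".join(parts)

vague = build_prompt("Summarize the article about climate change")
specific = build_prompt(
    "Summarize the article about climate change",
    length="3-4 sentences",
    focus=("the main causes", "the potential impacts discussed in the article"),
)
print(vague)
print(specific)
```

The second prompt tells the model how long the answer should be and what to concentrate on, which is exactly the information the first prompt leaves it to guess.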

Hence, PE has emerged as a key sub-domain in the field of generative AI. The need for PE is linked to a very popular notion in computer science: "Garbage in, garbage out", i.e., the quality of the output is closely linked to the quality of the input.

And the joke is - LLMs have a lot of knowledge stored in them, but they are still not GOD - yes, they cannot read your mind!

And often it's recursive PE (RPE), where we follow up on the GenAI model's output with subsequent prompts (typically adding more context / clarifications), which can lead to further refined outputs or more clarifications.

Now, obviously RPE is very relevant to the human brain, as it CLEARLY seems closely linked to (inter-personal) communication skills, which also play a major role in one's success in life! This should motivate one to seriously consider learning useful tips from the hot domain of RPE in AI to improve one's own communication skills! Not just inter-personal communication, but also internal dialogues, self-reflection, and self-brainstorming sessions.
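The RPE loop can be sketched as follows, assuming a generic `generate(context) -> str` function that stands in for a real GenAI call (hypothetical here, not any vendor's API). On every follow-up, the whole conversation so far is sent back to the model, so each new prompt can refine the earlier answers.

```python
from typing import Callable

def recursive_prompting(generate: Callable[[str], str],
                        first_prompt: str,
                        follow_ups: list[str]) -> list[str]:
    """Send a prompt, then keep following up, resending the whole conversation each time."""
    transcript: list[str] = []
    for prompt in [first_prompt, *follow_ups]:
        transcript.append(f"User: {prompt}")
        # The model sees the full history, so each follow-up can refine earlier answers.
        reply = generate("\n".join(transcript))
        transcript.append(f"Model: {reply}")
    return transcript

# Stand-in for a real GenAI call (hypothetical): it only reports how many user turns it saw.
def fake_generate(context: str) -> str:
    return f"reply #{context.count('User:')}"

transcript = recursive_prompting(
    fake_generate,
    "Summarize the article about climate change",
    ["Limit it to 3-4 sentences.", "Focus on the main causes and potential impacts."],
)
for line in transcript:
    print(line)
```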

It is also possible to provide “prompts” to dreams! Successful prompting about a topic during sleep onset has been linked to increased post-sleep creativity on that topic! So, definitely exploit dream prompting: Targeted Dream Incubation (TDI) is a technique for doing so.

- Connections - Connections between nodes in an ANN are both key and critical, because this is where learned information is stored. A lot of key ANN research focuses on how to design new and better connections. Likewise, the neural networks in the brain are constantly making and adjusting connections between neurons (also in response to continuous sensory inputs from the outside world). This enables the brain to keep learning, grow in understanding, and enhance its cognitive abilities in general. So, given that connections in neural networks (both in AI and in the human brain) play such a critical role, and researchers keep making them better for AI, we should be inspired to help our brain make new and better neural connections! And we can indeed do so, thanks to neuroplasticity! Studies show that the following endeavors help us make new and better connections in the brain (via neuroplasticity), resulting in better cognitive abilities:

- Mental Stimulation

- Learn continuously and acquire new skills regularly

- Engage in activities that challenge the brain, such as puzzles, learning new languages, playing musical instruments, and strategy games

- Physical Exercise, Stress Management, Sleep

- Regular exercise (aerobic as well as anaerobic) promotes brain health and performance in various ways, including better blood circulation, which also promotes the growth of new neurons

- Adequate sleep & staying hydrated

- Keep stress under control. Practices like mindfulness meditation, deep breathing exercises, and relaxation techniques can help manage stress, promoting neural connectivity. Regular meditation has been associated with increased gray matter in the brain and improved cognitive functions

- Diet - Eat Healthy, Avoid Unhealthy

- A balanced diet with ample antioxidants, vitamins, minerals, and healthy fats promotes brain health and performance

- Reduce or avoid intake of harmful substances such as tobacco, alcohol, and recreational drugs

- Maintain a healthy & meaningful social life

- Brain Training Programs - In recent times, several brain training apps have emerged that claim to improve cognitive skills such as problem-solving, memory, attention, and flexibility. Examples include Lumosity, CogniFit, Peak, Elevate, BrainHQ, Mensa Brain Training, NeuroNation, and Fit Brain Trainer. Investigation is underway in the scientific community to assess their effectiveness.
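To connect the ANN and brain views of "making and adjusting connections", here is a sketch of the classic Hebbian learning rule ("neurons that fire together wire together"). It is a textbook simplification of synaptic strengthening, not a model of real neuroplasticity, and the function and parameter names are illustrative. A connection grows only when both the sending and the receiving neuron are active.

```python
def hebbian_update(weights: list[list[float]], pre: list[float], post: list[float],
                   learning_rate: float = 0.1) -> list[list[float]]:
    """Plain Hebb rule: w[i][j] += learning_rate * post[i] * pre[j] (no decay, no bounds)."""
    return [
        [w + learning_rate * post_i * pre_j for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]

# One receiving neuron connected to two sending neurons; only the first sender is active.
weights = [[0.0, 0.0]]
for _ in range(3):  # three repeated co-activations
    weights = hebbian_update(weights, pre=[1.0, 0.0], post=[1.0])
print(weights)  # the active connection has grown; the inactive one is unchanged
```

Real learning rules usually add decay or normalization so that weights cannot grow without bound. The plain rule is kept here only because it shows the core idea most directly.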


- Transfer learning is a big and popular domain in the field of AI and ANNs. It is about taking a pre-existing AI model, trained for one task, and adapting it to perform a related task. This is in contrast to training a fresh AI model from scratch, which would otherwise incur a LOT MORE development cost. This key technique from AI should inspire us too, to make better use of our brain's neural networks. Specifically, we can be strategic as we go about our daily learning, making the overall learning process a lot faster and more efficient and building up our skill sets more easily. For instance:

- Learning piano can make learning another musical instrument easier.

- If you plan to acquire riding and driving skills, it is recommended to learn them in the following order:

- Riding a Bicycle: Start with a bicycle. This provides foundational skills in balance, coordination, and basic road awareness. Bicycling also helps develop physical coordination and an understanding of road safety, such as looking for traffic and signaling turns.

- Riding a Motorcycle: After mastering a bicycle, transitioning to a motorcycle introduces motorized control while still emphasizing balance and road awareness. Riding a motorcycle shares similarities with bicycling in terms of balance and maneuvering, but it adds the complexity of engine control, including throttle, clutch, and gears.

- Driving a Car: Learning to drive a car builds on the road awareness and traffic understanding developed through cycling and motorcycling. It introduces the concept of driving a larger vehicle with different control mechanisms (steering wheel, pedals) and typically provides a safer, enclosed environment. Car driving also emphasizes spatial awareness and understanding of vehicle dimensions.

- Driving a Truck: Finally, driving a truck builds on the skills learned in car driving, adding complexity in terms of size, handling, and potentially more complex mechanical systems. Trucks often require a deeper understanding of vehicle dynamics, such as handling a larger turning radius, different braking systems, and managing longer stopping distances.

- Learning one language can not only make learning additional ones easier but also improve cognitive abilities (including problem-solving skills, memory, and attention)! (1, 2, 3, 4)

- Problem solving skills - Critical thinking and problem-solving strategies learned in one discipline, such as computer science, can be applied to other fields, like business or engineering.

- Math & Physics - Skills learned in mathematics, such as algebraic manipulation, can be transferred to solving physics problems where similar equations are used.

- Sports - An athlete trained in one sport may find it easier to pick up another sport that uses similar physical skills, like hand-eye coordination or strategic thinking.

- Art & design - Skills in one form of art, such as drawing, can aid in learning another, like painting, because of the shared principles of composition, color theory, and perspective.

- Teaching - Educators often transfer classroom management skills or instructional strategies from one subject or age group to another, adapting them as needed.

- Cooking - Techniques and knowledge from one type of cuisine can help in learning and experimenting with another, due to similarities in cooking methods or ingredients.

These are just some illustrations of how to manage your learning agendas more smartly and efficiently, saving a lot of time and resources! The underlying principles in these illustrations are what you should apply in your learning agendas.
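The AI side of this idea can be shown with a toy example of fine-tuning, one common form of transfer learning. The model is a one-variable linear model trained by gradient descent, and the two tasks are hypothetical. We first learn task A (y = 2x). We then learn the related task B (y = 2x + 0.5) twice: once from scratch, and once starting from task A's learned weights. We count the steps each run needs to reach the same error.

```python
def train(data, w=0.0, b=0.0, lr=0.05, tol=1e-3, max_steps=10_000):
    """Gradient descent on mean squared error for y ≈ w*x + b. Returns (w, b, steps used)."""
    n = len(data)
    for step in range(max_steps):
        errors = [(w * x + b) - y for x, y in data]
        if sum(e * e for e in errors) / n < tol:
            return w, b, step  # good enough: stop early
        grad_w = 2 * sum(e * x for e, (x, _) in zip(errors, data)) / n
        grad_b = 2 * sum(errors) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b, max_steps

xs = [0.0, 0.5, 1.0, 1.5, 2.0]
task_a = [(x, 2 * x) for x in xs]        # hypothetical "source" task
task_b = [(x, 2 * x + 0.5) for x in xs]  # related "target" task

w_a, b_a, _ = train(task_a)                         # learn task A first
_, _, steps_scratch = train(task_b)                 # task B from scratch
_, _, steps_transfer = train(task_b, w=w_a, b=b_a)  # task B starting from A's weights
print(f"from scratch: {steps_scratch} steps, with transfer: {steps_transfer} steps")
```

Starting from task A's weights, the model is already close to a good solution for task B, so it reaches the target error in fewer steps. This mirrors how knowing the piano gives you a head start on another instrument.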

- RAM (Random Access Memory) in the computers that run artificial neural networks (ANNs) is critical, as it temporarily stores information for immediate use, such as program instructions, data inputs, model weights, and other voluminous runtime intermediates. Likewise, "short-term memory" (STM) in the human brain temporarily stores information needed for immediate use, for example, the digits of a phone number you are trying to dial, what you are about to say in a conversation, or your next steps in an ongoing problem-solving endeavor. STM is crucial for everyday cognitive tasks.

Having a better (i.e., faster and / or bigger) RAM can enable handling of bigger ANNs, bigger data, and more parallel processing, potentially making both model training and inference faster. This goes a long way towards scalability of tasks, which is a big deal (read about ChatGPT). Likewise, better short term memory increases productivity in everyday cognitive tasks, including understanding new concepts, learning new skills, problem solving, making decisions, communicating, and multi-tasking.
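A quick way to see why memory size limits model size is to compute how much memory it takes just to hold a model's weights: number of parameters × bytes per parameter. The 7-billion-parameter model below is a hypothetical example. This counts the weights alone, and activations, optimizer state (during training), and other runtime data need additional memory on top.

```python
def weights_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold a model's weights, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

# Hypothetical 7-billion-parameter model at common numeric precisions.
for name, nbytes in [("float32", 4), ("float16", 2), ("int8", 1)]:
    print(f"{name}: {weights_memory_gb(7e9, nbytes):.0f} GB")
```

Halving the bytes per parameter halves the memory needed. This is one reason lower-precision formats are popular for running large models on limited hardware.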

So, how do we improve our short-term memory?! All of the recommendations in section … apply here. In addition to those, according to ChatGPT:

- Stay Organized: Keep your environment tidy and minimize distractions. An organized workspace can help you focus better and reduce cognitive load.

- Chunking: Break down large amounts of information into smaller, manageable chunks. This makes it easier to remember and recall.

- Repetition and Review: Repeatedly practice recalling information. Reviewing material at spaced intervals can help reinforce memory.

- Mnemonics: Use mnemonic devices, like acronyms or visualization techniques, to link new information to existing knowledge in a memorable way.

- Use Multiple Senses: Engage different senses when learning new information. For example, reading aloud, writing things down, or using visual aids can help reinforce memory.
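Two of these tips, chunking and spaced review, are simple enough to sketch. The phone number is made up. The review schedule, whose gaps double after each review, is only one assumed example of "spaced intervals", not a prescribed or scientifically tuned schedule.

```python
def chunk(text: str, sizes: list[int]) -> list[str]:
    """Split a long string into consecutive chunks of the given sizes."""
    chunks, start = [], 0
    for size in sizes:
        chunks.append(text[start:start + size])
        start += size
    return chunks

def review_days(first_gap: int = 1, reviews: int = 5) -> list[int]:
    """Days (counted from first study) for reviews whose gaps double each time (assumed schedule)."""
    days, gap, day = [], first_gap, 0
    for _ in range(reviews):
        day += gap
        days.append(day)
        gap *= 2
    return days

print(chunk("5551234567", [3, 3, 4]))  # ['555', '123', '4567']
print(review_days())                   # [1, 3, 7, 15, 31]
```

Three short chunks are easier to hold in short-term memory than ten separate digits. Widening the gaps between reviews spreads practice out over time instead of cramming it.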

- Machine learning models (the core of GenAI) usually face two big challenges: OVERFITTING and UNDERFITTING. Overfitting happens if a GenAI model was "over-trained", meaning that after training it performs well on the training dataset but often not on inputs that are not part of the training dataset. Underfitting happens if it is "under-trained", meaning that after training it does not perform well even on the dataset used for training. This is a warning for the Human Brain as well :) That is, in humans:

- Overfitting is said to occur when someone becomes overly specialized in a particular area, learning to recognize and respond to very specific patterns that might not generalize well to new situations. For instance, a chess player might become extremely good at a particular opening but struggle with variations or unexpected moves. Fortunately, the following remedies can help:

- Broadening Experiences: Engaging in diverse activities and learning experiences can help. For example, a chess player might study different types of games, puzzles, or strategic thinking exercises outside of chess to enhance general cognitive abilities. Also, deliberately seeking out new and varied experiences can prevent over-specialization. Traveling, meeting new people, and trying different hobbies can expand one's perspective.

- Mindfulness and Reflection: Regularly reflecting on one's thought processes and decisions can help identify overly rigid patterns. Mindfulness practices can aid in recognizing when one is stuck in a particular mode of thinking.

- Underfitting is said to occur when a person fails to grasp the complexity of a situation, often due to insufficient knowledge or experience. For instance, a novice cook might not understand the subtleties of flavor combinations and cooking techniques, resulting in simplistic and bland dishes. Fortunately, the following remedies can help:

- Deep Learning: Encourage focused and in-depth study in areas of interest or importance to build a robust understanding.

- Seeking Feedback: Actively seeking feedback from more knowledgeable individuals can provide insights into areas that need improvement. Constructive criticism from experienced chefs can help a novice cook understand where they are going wrong.

- Engagement in Deliberate Practice: Focusing on specific areas that are challenging can lead to significant improvements. A cook might repeatedly practice particular techniques or dishes to develop expertise.

- Continual Learning: Promote lifelong learning to keep skills and knowledge up to date.
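Here is a toy, hypothetical illustration of the two failure modes (not a GenAI model). The true rule is y = 2x + 1, and the training labels carry a little noise. The "underfit" model ignores its input and always predicts the training average. The "overfit" model memorizes the training set and answers with the nearest memorized label. The least-squares straight line sits in between. Each model is scored on the training data and on unseen test points.

```python
def mse(model, data):
    """Mean squared error of a model (a function x -> prediction) on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)

# Hypothetical data: the true rule is y = 2x + 1; training labels carry a little noise.
train_data = [(x, 2 * x + 1 + (0.5 if x % 2 == 0 else -0.5)) for x in range(8)]
test_data = [(x, 2 * x + 1) for x in (0.5, 2.5, 4.5, 8, 9)]

# Underfit: ignores the input and always predicts the training average.
mean_y = sum(y for _, y in train_data) / len(train_data)
def underfit(x):
    return mean_y

# Overfit: memorizes the training set and returns the nearest memorized label.
def overfit(x):
    return min(train_data, key=lambda pair: abs(pair[0] - x))[1]

# Reasonable fit: least-squares straight line through the training data.
mean_x = sum(x for x, _ in train_data) / len(train_data)
slope = (sum((x - mean_x) * (y - mean_y) for x, y in train_data)
         / sum((x - mean_x) ** 2 for x, _ in train_data))
intercept = mean_y - slope * mean_x
def line(x):
    return slope * x + intercept

for name, model in [("underfit", underfit), ("overfit", overfit), ("line", line)]:
    print(f"{name:8s} train MSE={mse(model, train_data):.3f}  test MSE={mse(model, test_data):.3f}")
```

The memorizer scores perfectly on the data it has seen but does poorly on new inputs. The constant predictor does badly even on its own training data.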


- VOLUME - Is bigger better in the world of neural networks? It could be, as suggested by the huge global impact of large language models such as ChatGPT. Then, can we continue to add more neurons to our brain's neural networks? This is currently an active area of research, known as "neurogenesis". While the human brain primarily undergoes neurogenesis during development, research suggests that certain areas of the adult brain, such as the hippocampus, continue to produce new neurons throughout life. Continued study is needed to shed more light on neurogenesis as well as on how to intentionally promote it. It is also believed that the tips listed in Section "Connections" for promoting neuroplasticity (such as exercise, diet, stress management, and mental stimulation) can promote neurogenesis as well.
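For ANNs, "volume" is usually measured as the number of parameters, and most of these are connection weights. The sketch below counts them for a fully connected feed-forward network with hypothetical layer sizes. It shows that in densely connected layers, the number of connections grows much faster than the number of neurons.

```python
def count_parameters(layer_sizes: list[int]) -> tuple[int, int]:
    """Return (connection weights, biases) for a fully connected feed-forward network."""
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights, biases

print(count_parameters([100, 100, 10]))  # (11000, 110)
print(count_parameters([200, 200, 10]))  # (42000, 210)
```

Doubling the neurons in the first two layers roughly quadruples the number of connections (11,000 to 42,000), because every neuron in one layer connects to every neuron in the next.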

What Can GenAI Learn From Human Brain?

So, what can GenAI learn from its inspiration and the master creator, the Human Brain? Well, as stated earlier, modern-day GenAI systems are ANNs under the hood, which are already inspired by the Human Brain. Still, the structure and workings of the human brain remain far more complex, and we still do not understand A LOT of it. This means that as research reveals more and more about the human brain, the potential for ANNs to improve will increase. Some of this improvement is expected in areas related to general intelligence, where modern-day ANNs greatly lag behind. Again, this is possible because ANNs were inspired by the human brain; the resulting key similarities at the foundational level potentially enable ANNs to keep learning from the Human Brain in the future. Some key areas where GenAI is still learning from the Human Brain:

- Energy Efficiency: The Human Brain operates on ~20 W, less power than a typical incandescent light bulb! Hence, the Human Brain is considered to be a LOT MORE energy efficient than the core of GenAI, ANNs (a single run of one known GenAI core, GPT-4, consumes 30,000 watt-hours of energy, even though it has far fewer neural nodes and connections). Research in the ANN domain is currently underway to mimic the high energy efficiency of the human brain. For instance, spiking neural networks (SNNs), inspired by the human brain, are being explored; their neurons consume energy mainly when they spike.

- Hardware vs. Software: The Human Brain is primarily hardware, while ANNs are primarily software. This could be one reason the human brain is a lot more efficient and powerful than ANNs in many ways (such as energy efficiency and general intelligence). This should also inspire ANN researchers. In fact, research is already underway to port more and more parts of ANNs into primarily hardware implementations (e.g., neuromorphic chips).
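The "consume energy only when spiking" idea behind SNNs can be illustrated with a leaky integrate-and-fire neuron, a standard simplified spiking-neuron model. In this sketch (the threshold, leak, and input values are made-up illustrative numbers), the neuron accumulates input, leaks a little charge each time step, and emits a spike, or "event", only when its potential crosses the threshold. The code does not model energy; it only shows that a quiet neuron produces no events, and in event-driven (neuromorphic) hardware, events are what drive most of the work.

```python
def simulate_lif(inputs: list[float], threshold: float = 1.0, leak: float = 0.9) -> list[int]:
    """Leaky integrate-and-fire neuron: returns the time steps at which it spikes."""
    potential, spikes = 0.0, []
    for t, current in enumerate(inputs):
        potential = potential * leak + current  # integrate the input, with leak
        if potential >= threshold:
            spikes.append(t)  # fire a spike (an "event")...
            potential = 0.0   # ...and reset
    return spikes

print(simulate_lif([0.0, 0.0, 0.6, 0.6, 0.0, 0.0, 1.2, 0.0]))  # [3, 6]
print(simulate_lif([0.0] * 8))                                 # [] (no input, no spikes)
```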

Final Take

Given that the ongoing AI revolution is considered so impactful that it is touted as the next Industrial Revolution, and that modern-day AI (which is based on ANNs) is inspired by the Human Brain, it was really important to do a deep investigation of two questions: (1) What can the Human Brain learn from AI / GenAI? (2) What can AI / GenAI learn from the Human Brain? That is what this article attempts.

One very important final take from my side: AI / GenAI and the Human Brain need not compete with each other. BUT, at the very least, they should work together. For example, are you using the latest and greatest that AI has to offer, as of today, to help with your life and work? If yes, it will turbocharge your life (a CTO friend just remarked to me: “ChatGPT is like hiring a junior engineer”!). If no, then not only are you missing out big, but you are also risking falling behind in life and work. Read more here: "How Do I Save My Job From AI ?!"

(ChatGPT was used to compose parts of this article)

(* Image adapted from Neebal and Cognifit)